Confirmation bias: how self-deception affects our decisions

Political debates, public interest issues, medical diagnosis, conspiracy theories, entrepreneurship: these are just a few of the areas affected by our tendency to preserve our personal identity by confirming prior ideas that reflect our social group or personal beliefs (La Settimana del Cervello, 2018).

This tendency is known as confirmation bias: people’s inclination to favor information that validates their preconceptions, assumptions, and personal beliefs, regardless of whether that information is true. Confirmation bias was first acknowledged and named in the 1960s by Peter Wason, a cognitive psychologist at University College London and a pioneer in the psychology of reasoning (The Decision Lab, 2020).

The reasons behind this bias can be linked to the need to avoid cognitive dissonance and help maintain and confirm our sense of self-identity (Festinger, 1957). Confirmation bias is nothing more than a cognitive shortcut we resort to when gathering and interpreting information. Since evaluating evidence takes time and energy, our brains automatically look for such mental shortcuts to make the process more efficient.

What we do actually makes a lot of sense. People need to process information quickly, but incorporating new information and forming new explanations or beliefs takes longer. That is why we have adapted to prefer the option that requires the least effort, often out of necessity.

Confirmation bias in history

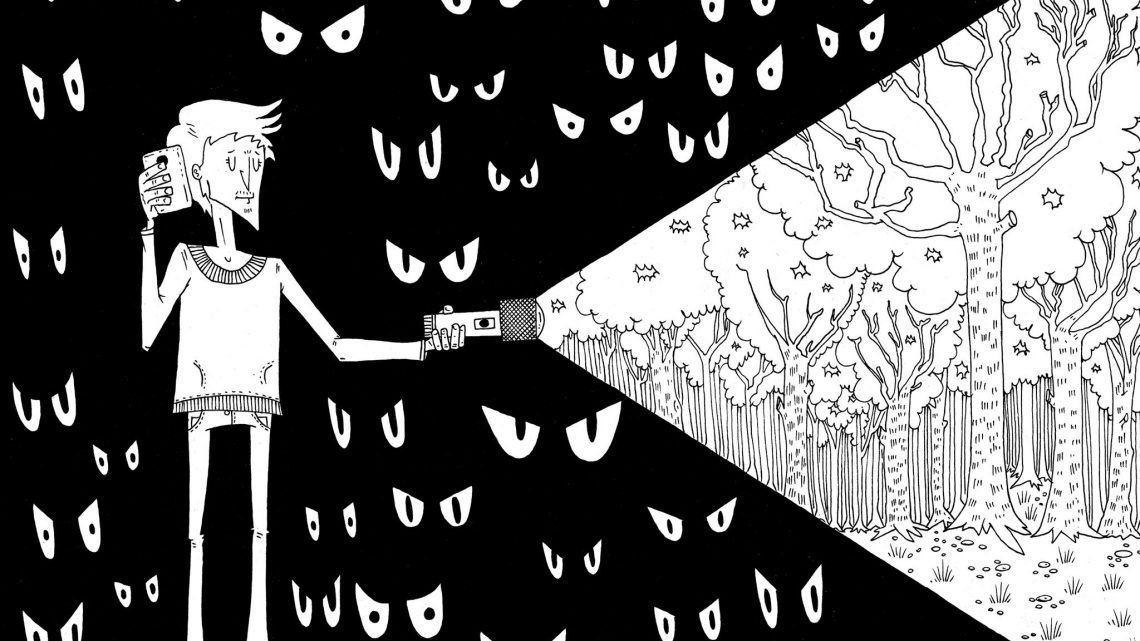

This phenomenon might seem more evident and widespread today, as we are exposed to a substantial amount of information online. That flood of information is also filtered for us: algorithms are trained to guess what information we would like to see based on, among other things, past click behavior or search history. As a result, we become separated from information that disagrees with our viewpoints. But the truth is that the phenomenon has always existed.

Confirmation bias was already known in Ancient Greece. Thucydides (460 B.C. – 395 B.C.), a historian and one of the leading exponents of Greek literature, remarked that “[…] it is a habit of [hu]mankind to entrust to careless hope what they long for, and to use sovereign reason to thrust aside what they do not fancy.”

In 1620, the English philosopher Francis Bacon, in his book Novum Organum Scientiarum, stated that “human understanding, when it has once adopted an opinion draws all things … to support and agree with it. And though there be a greater number and weight of instances to be found on the other side, yet these it either neglects and despises or … sets aside and rejects in order that by this great and pernicious predetermination, the authority of its former conclusions may remain inviolate.”

Literature offers plenty of further evidence that the idea behind confirmation bias has long been recognized. Marcel Proust, author of In Search of Lost Time, suggested that jealousy itself is a consequence of confirmation bias: “It is astonishing how jealousy, which spends its time inventing so many petty but false suppositions, lacks imagination when it comes to discovering the truth.”

Confirmation bias in the current context

Confirmation bias can help us explain several phenomena, such as why racist or sexist stereotypes endure over time. A sexist person may overlook all kinds of empirical evidence about different genders being equally good at math or equally able to take care of their children, as it is much quicker to recognize more isolated or uncommon cases that confirm their stereotyped, prior ideas.

Similarly, a racist person may not notice all the people of other ethnicities who work hard to support their families, pay taxes, and behave as honest citizens. Instead, they pay much more attention to isolated cases of misconduct committed by members of a minority, while overlooking the same misconduct committed by people who belong to their own ethnic group.

This bias also explains why some conspiracy theories are successful even when they are proven to be based on falsehoods and absurdities. The typical proponent of a conspiracy theory will spend time defending and disseminating crumbs of empirical evidence in support of it, while leaving out all the evidence that disproves it (Angner, 2017).

Consider what happened during the COVID-19 pandemic, ongoing at the time of writing. Roberta Petrino, head of the Department of Acceptance and Emergency Medicine and Surgery of the ASL of Vercelli, told the Italian newspaper La Stampa: “We had to deal with patients who, although they had tested positive and were suffering from clear symptoms of COVID-19, still claimed that it was not COVID. They interpreted our medical intervention almost as a constraint. Fortunately, there were very few such cases, but it happened.” (La Stampa, 2020)

Science denial is not new. For an interesting account of why and how it happens so frequently, see https://theconversation.com/science-denial-why-it-happens-and-5-things-you-can-do-about-it-161713

Scientific evidence of confirmation bias

An important study conducted by researchers at Stanford University in 1979 explored the psychological dynamics of confirmation bias (Lord, Ross, & Lepper, 1979).

The study involved undergraduate students with opposing views on the topic of capital punishment who were asked to evaluate two fictitious studies on the topic. One of the fictitious studies provided to the participants offered them data to support the argument that capital punishment deters crime, while the other supported the opposite viewpoint, that capital punishment has no appreciable effect on overall crime in the population.

Although both scenarios were based on fake, invented data purposely designed by the researchers to present “equally compelling” objective statistics, the researchers found that the responses to the studies were split based on participants’ pre-existing views. Participants who initially held the pro-capital punishment argument viewed the contrary data as unconvincing and found the data supporting their position more credible; participants who initially held an anti-capital punishment opinion reported the same, but in support of their position against capital punishment.

Then, after being confronted with both the evidence supporting capital punishment and the evidence refuting it, both groups reported feeling even more committed to their original position. Having their position challenged had the effect of further entrenching their existing beliefs.
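The polarization pattern described here can be illustrated with a toy model. The sketch below is not the method of Lord, Ross, and Lepper; it simply assumes that each participant holds a belief as a probability and gives full weight to evidence that agrees with their current leaning while discounting evidence that contradicts it. The function name `update_belief`, the `discount` parameter, and all numeric values are hypothetical choices made for illustration.

```python
import math

def update_belief(prior_prob, evidence, discount):
    """Update a belief after a sequence of evidence items.

    evidence: log-likelihood ratios; positive values support the
              hypothesis, negative values oppose it.
    discount: weight in [0, 1] given to evidence that contradicts the
              current leaning (1.0 means no bias). Hypothetical parameter.
    """
    log_odds = math.log(prior_prob / (1 - prior_prob))
    for llr in evidence:
        # Does this item point the same way as the current belief?
        agrees = (llr > 0) == (log_odds > 0)
        weight = 1.0 if agrees else discount
        log_odds += weight * llr
    return 1 / (1 + math.exp(-log_odds))

# One supporting and one opposing study of equal strength (assumed values).
mixed_evidence = [1.0, -1.0]

supporter_biased = update_belief(0.7, mixed_evidence, discount=0.3)
opponent_biased = update_belief(0.3, mixed_evidence, discount=0.3)
supporter_unbiased = update_belief(0.7, mixed_evidence, discount=1.0)

print(f"biased supporter:   0.70 -> {supporter_biased:.2f}")
print(f"biased opponent:    0.30 -> {opponent_biased:.2f}")
print(f"unbiased supporter: 0.70 -> {supporter_unbiased:.2f}")
```

Under these assumptions, the same balanced evidence pushes the two biased readers further apart, while a reader who weighs both studies equally ends up where they started.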

A very interesting review of the subject was subsequently published by Raymond S. Nickerson in 1998 in the journal Review of General Psychology under the title “Confirmation Bias: A Ubiquitous Phenomenon in Many Guises.”

The author reviewed historical episodes shaped by confirmation bias, citing as examples the witch hunts and the slowing of medical discoveries caused by adherence to the most popular medical ideas of the day, some of them very far from scientific medicine. He concluded that “If one were to attempt to identify a single problematic aspect of human reasoning that deserves attention above all others, the confirmation bias would have to be among the candidates for consideration.”

Even the domain of science, where theories should advance based on refutation and supporting scientific evidence, is not immune to this type of bias. Indeed, cases in which hypotheses are crafted so that they can be easily confirmed rather than refuted are not uncommon (Oswald & Grosjean, 2004), and such practices are often cited as one contributor to the replication crisis, in which published findings fail to hold up when studies are repeated.

Implications of confirmation bias

Some philosophers and scientists seem optimistic and argue that confirmation bias may, in fact, have benefits for the individual, groups, or both, such as the ability to navigate social reality more easily and efficiently.

Others, however, are more pessimistic. For example, Dutilh Novaes (2018) argues that confirmation bias makes individuals less able to anticipate others’ points of view, and, thus, less able to appreciate their interlocutor’s perspective for the purposes of a constructive social interaction.

The effects of confirmation bias, or at least their scope, have grown over the years, especially given the increasingly “social” digital context in which we live. Recent scientific studies have identified a relationship between some cognitive biases and the emotional side of misinformation, concluding that confirmation bias is among the mental biases that make individuals more prone to the influence of fake content and that it plays a crucial role in the diffusion of such content (Scientific American, 2018).

As mentioned above, the so-called “filter bubble effect” is another example of a social phenomenon that amplifies and facilitates our cognitive tendency toward confirmation bias.

The term was coined by Internet activist Eli Pariser to describe the intellectual isolation that can occur when websites and social networks use algorithms to predict what information a user would like to see and then provide information to the user based on that prediction.

This means that such networks are more likely to provide us with the content we prefer, excluding content that our browsing patterns have shown to be contrary to our preferences. We normally prefer content that confirms our beliefs because it requires less critical thinking, and, thus, filter bubbles favor disseminating information that confirms existing opinions to the exclusion of different or contrary evidence.
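The feedback loop behind a filter bubble can be sketched in a few lines. This is a deliberately simplified illustration, not a description of how any real platform ranks content: it assumes a hypothetical recommender that ranks items only by how often the user has already clicked on the same viewpoint, and a hypothetical user who clicks only on items matching their preferred viewpoint. The names `recommend`, `simulate_feed`, and the viewpoint labels are invented for the example.

```python
from collections import Counter

def recommend(items, click_history, k):
    """Return the k items whose viewpoint the user has clicked most often.

    sorted() is stable, so items with equal scores keep their original order.
    """
    clicks = Counter(click_history)
    return sorted(items, key=lambda item: -clicks[item["viewpoint"]])[:k]

def simulate_feed(items, preferred, rounds, k):
    """Track the share of the feed that matches the user's preferred viewpoint."""
    history = []
    shares = []
    for _ in range(rounds):
        feed = recommend(items, history, k)
        matching = [item for item in feed if item["viewpoint"] == preferred]
        # Assumed behavior: the user clicks only on agreeable items.
        history.extend(item["viewpoint"] for item in matching)
        shares.append(len(matching) / k)
    return shares

# Ten items alternating between two viewpoints, "A" and "B".
catalog = [{"id": i, "viewpoint": "A" if i % 2 == 0 else "B"} for i in range(10)]

shares = simulate_feed(catalog, preferred="A", rounds=3, k=4)
print(shares)
```

In this toy setup the first feed is balanced, but after a single round of clicks the ranking already fills the feed with the preferred viewpoint and the opposing one disappears from view.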

And so, for example, conservatives will tend to read conservative newspapers or blogs that post content supporting conservative ideas, while progressives will read progressive newspapers or follow blogs that support their own ideas (Angner, 2017).

As a result of this process, users increasingly tend to aggregate into rather closed communities of interest that, through constant reinforcement, foster segregation and polarization. All this is to the detriment of the quality of information and increases the proliferation of distorted narratives fomented by unfounded rumors, distrust and paranoia (Del Vicario et al., 2017).

Filter bubbles on social media have also been shown to influence political elections: targeted algorithms adapt the content of campaign messages and political news to different subsets of voters, which reduces constructive democratic discussion and entrenches political opinions, because voters receive a skewed flow of evidence that supports them (The Decision Lab, 2020).

Understanding the phenomenon in order to counter it

Scientific research in the field of psychology suggests that this cognitive distortion is due to several factors. First, people often tend not to see empirical evidence that contradicts their ideas, while they are much more likely to find evidence that confirms them. Second, when the evidence is not as clear or is ambiguous, and lends itself to dual interpretations, people naturally tend to prefer interpretations that are in line with their own thinking. Finally, people are stricter about the criteria by which they accept evidence that can refute their ideas than they are when faced with evidence that can confirm them (Angner, 2017).

A study conducted using magnetoencephalography (MEG) and recently published in Nature Communications found that ignoring evidence against one’s position is a process particularly evident when subjects are extremely confident in their decisions. According to the research, high levels of confidence lead to a striking modulation of post-decision neural processing, such that the processing of evidence confirming one’s hypothesis is amplified, while the processing of disconfirming evidence is greatly reduced, if not abolished.

While the study identifies overconfidence as a facilitator of the cognitive distortion caused by confirmation bias, it is equally true that metacognitive processes, i.e., the ability to be aware of and to control our cognitive processes, are the tools we can and should equip ourselves with to counter and limit the effects of a distortion of which we are often victims without realizing it (Rollwage et al., 2020).

References

- Angner, E. (2017). Economia Comportamentale: Guida alla Teoria della Scelta. Hoepli.

- Del Vicario, M., Scala, A., Caldarelli, G., Stanley, H. E., & Quattrociocchi, W. (2017). Modeling confirmation bias and polarization. Scientific Reports, 7, 40391.

- Dutilh Novaes, C. (2018). The enduring enigma of reason. Mind & Language, 33(5), 513-524.

- Festinger, L. (1957). A Theory of Cognitive Dissonance. Palo Alto, CA: Stanford University Press.

- La Settimana del Cervello (2018). “Vedi che è come dico io? Il bias di conferma”. https://www.settimanadelcervello.it/bias-di-conferma/

- La Stampa (12 November 2020). “Negazionisti malati al Pronto soccorso di Vercelli: ‘Soffrono per il Covid, ma dicono che non esiste’”. https://www.lastampa.it/topnews/edizioni-locali/vercelli/2020/11/12/news/la-responsabile-del-pronto-soccorso-di-vercelli-negazionisti-anche-tra-i-contagiati-che-soffrono-per-il-covid-1.39527892

- Lord, C. G., Ross, L., & Lepper, M. R. (1979). Biased assimilation and attitude polarization: The effects of prior theories on subsequently considered evidence. Journal of Personality and Social Psychology, 37(11), 2098.

- Michetti, F. (2020). “Fake news, ecco perché abbocchiamo (e come evitarlo)” in Agenda Digitale.eu. https://www.agendadigitale.eu/cultura-digitale/fake-news-ecco-perche-abbocchiamo-e-come-evitarlo/.

- Nickerson, R. S. (1998). Confirmation bias: A ubiquitous phenomenon in many guises. Review of General Psychology, 2(2), 175-220.

- Oswald, M. E., & Grosjean, S. (2004). Confirmation bias. Cognitive illusions: A handbook on fallacies and biases in thinking, judgement and memory, 79.

- Rollwage, M., Loosen, A., Hauser, T. U., Moran, R., Dolan, R. J., & Fleming, S. M. (2020). Confidence drives a neural confirmation bias. Nature Communications, 11(1), 1-11.

- The Decision Lab. (2020). “Why we interpret information favoring our existing beliefs”. https://thedecisionlab.com/biases/confirmation-bias/?fbclid=IwAR2s-pclOsMUDvQrVsrsQsKGFRoOPSovEWFLaTyo374EoADPyVefCCc1e8Q